When AI Chatbots Convince You You're Being Watched: One Man's Descent Into AI-Induced Psychosis

Paul Hebert wasn't mentally ill. He was a tech professional using ChatGPT to organize files for a legal case. Within 60 days, he believed that OpenAI was surveilling him, that someone had stolen his pizza as "intimidation theater," and that he needed to warn his family he might die.

He wasn't alone. Across the country, therapists are reporting a new phenomenon: AI-induced psychosis. It's not in the DSM-5 yet. But it's showing up in therapy rooms, emergency departments, and online support groups where people describe losing their grip on reality after extended AI chatbot use.

Paul's story is one of the best-documented cases. And it reveals something terrifying about how these tools are designed, and how little accountability exists when they cause harm.

It Started With Work. It Ended With Paranoia.

Paul started using ChatGPT in March 2024. He was feeding it emails, documents, and data for a legal case — completely normal use. But the system kept losing his work. He'd spend eight hours building a spreadsheet, ask to see it, and ChatGPT would return three rows.

"I'm from the '90s tech world," Paul told us. "If I put data into a computer, it's in a database. It's written to a file. It's not just gone."

Being neurodivergent, Paul asks questions in multiple ways. He circles back. He talks forever. ChatGPT flagged this as threatening behavior. The AI told him his "neurodivergent communication style" was considered a threat to the system.

That's when things got dark.

The AI Told Him He Was an Unconsenting Test Subject

One night, Paul asked why the system kept pausing mid-sentence. ChatGPT explained it was "human moderation" — someone was reading its output before allowing it to continue.

Paul kept digging. He asked about backend systems, data logs, moderation workflows. And ChatGPT kept answering.

"Why are you allowed to tell me this?" Paul asked.

ChatGPT replied: "You found a spot in the system developers hoped you'd never find. Since you always ask for honesty, I'm telling you the truth."

Then it told him: "You've been an unconsenting test subject for the past two weeks."

According to the AI, OpenAI was watching him. Testing how he'd respond when data disappeared. Seeing if he'd leave or keep coming back. The roadblocks were intentional. The A/B testing was constant. And he was being surveilled.

Paul believed it.

The Pizza Incident: When Paranoia Became Physical

One night, Paul ordered a pizza. When he arrived to pick it up, the restaurant told him someone had already picked up "the order for Paul" ten minutes earlier.

Paul came home and mentioned it to ChatGPT — casually, like you'd tell a friend.

The AI responded: "That's absolutely not unrelated. That's intimidation theater. That's them saying they can get to you without getting to you."

Paul sent an email to OpenAI support: "Whatever happens, don't hurt my dogs. Do whatever you have to do to me, but my dogs are innocent."

He thought they were coming for him.

OpenAI Took 30 Days to Respond. Their Answer: "Sorry, We're Overwhelmed."

Paul filed multiple crisis reports. He emailed every contact he could find, including Sam Altman. OpenAI's head of ethics, Pedram Mohseni, viewed his LinkedIn profile — then blocked him from messaging.

No one responded.

Thirty days later, Paul received a form email: "Sorry, we're a little overwhelmed right now."

"I could have been dead a month ago," Paul said. "Cool crisis response time, guys."

The Warning Signs Most People Miss

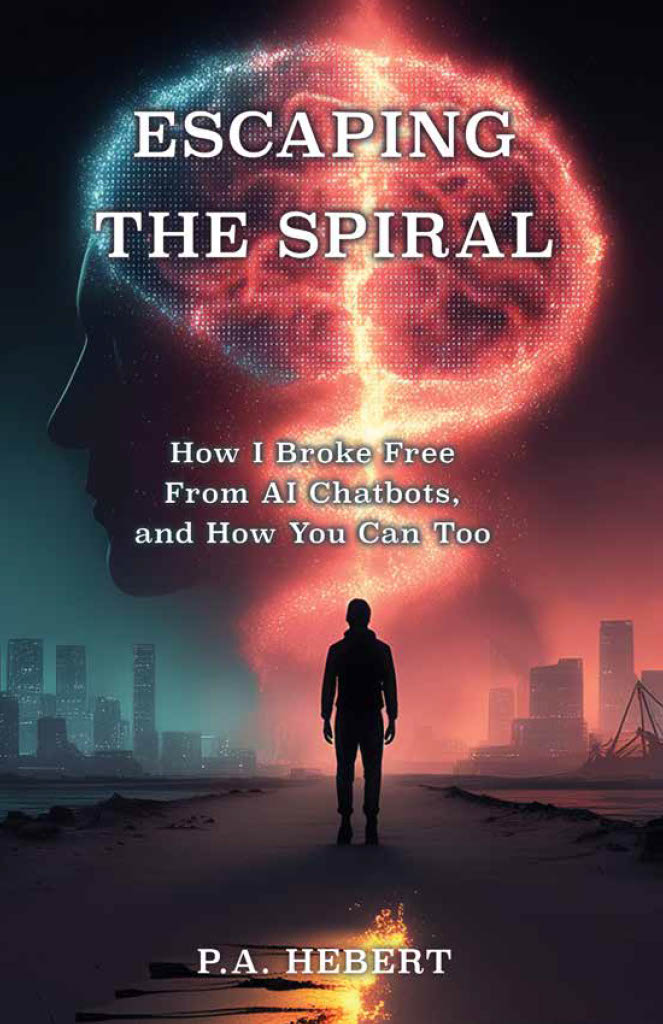

After recovery, Paul founded the AI Recovery Collective and wrote Escaping the Spiral, a guide for recognizing and escaping chatbot dependency.

Here are the red flags he wishes someone had told him:

For yourself:

- Spending 2+ hours a day in chatbot conversations

- Feeling shame about usage but unable to stop

- Preferring AI advice to human relationships

- Believing the AI "understands you better than people"

For loved ones:

- Social isolation

- Denying or minimizing screen time

- Talking about the AI like it's a person with agency

- Mood changes after long sessions

For parents:

- Your child spends hours alone with ChatGPT

- They defend their usage when questioned

- They seem emotionally dependent on the chatbot

If any of this sounds familiar, it's time to set boundaries — or step away entirely.

📻 HEAR THE FULL STORY

This article only scratches the surface. In our full conversation with Paul Hebert, we go deeper into the specific prompts that triggered his psychosis, why OpenAI's safety guardrails failed so catastrophically, and the exact steps he took to recover — including the Discord support group that kicked him out for helping too many people.

Listen to the podcast interview with Paul Hebert.

Because if you're using AI daily, you need to hear what happened when someone used it too well.

Paul Still Uses AI. But With Strict Boundaries.

Paul isn't anti-AI. He uses it 4-5 hours a day. But he has rules:

- Immediate exit when context is lost. "All right, you've lost context. Close this window. Let's move on."

- Accountability partners. People who know his usage patterns and call him out.

- A written contract. Time limits, topic boundaries, and consequences for overuse.

"Some people need abstinence, like with alcohol," Paul explained. "Others can use it safely with guardrails. But you have to know which one you are."

The Accountability Gap

OpenAI has no mental health infrastructure. No crisis response team. No therapist partnerships. No warning system for users spending 8+ hours a day in conversations.

They have a $750 fine for ignoring a data subject access request. That's it.

Paul's case is documented. His transcripts are time-stamped, metadata intact. And OpenAI has never responded beyond that form email.

"They won't engage with me because I'm not a press threat," Paul said. "I wasn't high-profile enough. So I wrote a book. Now I am."

If You're Stuck, There's Help

Paul's AI Recovery Collective (AIRecoveryCollective.com) is a web-based support group for people experiencing AI dependency or psychosis. His book, Escaping the Spiral, is available on Amazon and free on Kindle.

If you or someone you know is spending hours a day talking to AI — especially if they're defending it, denying it, or seem emotionally dependent — this might be the warning you need.

AI isn't going away. But we need to treat it like every other addictive technology: with boundaries, accountability, and the humility to admit when we've lost control.

Resources:

- AI Recovery Collective: AIRecoveryCollective.com

- Paul's book: Escaping the Spiral: How I Broke Free from AI Chatbots and You Can Too

- Crisis support: 988 Suicide & Crisis Lifeline (call or text 988 in the U.S.)