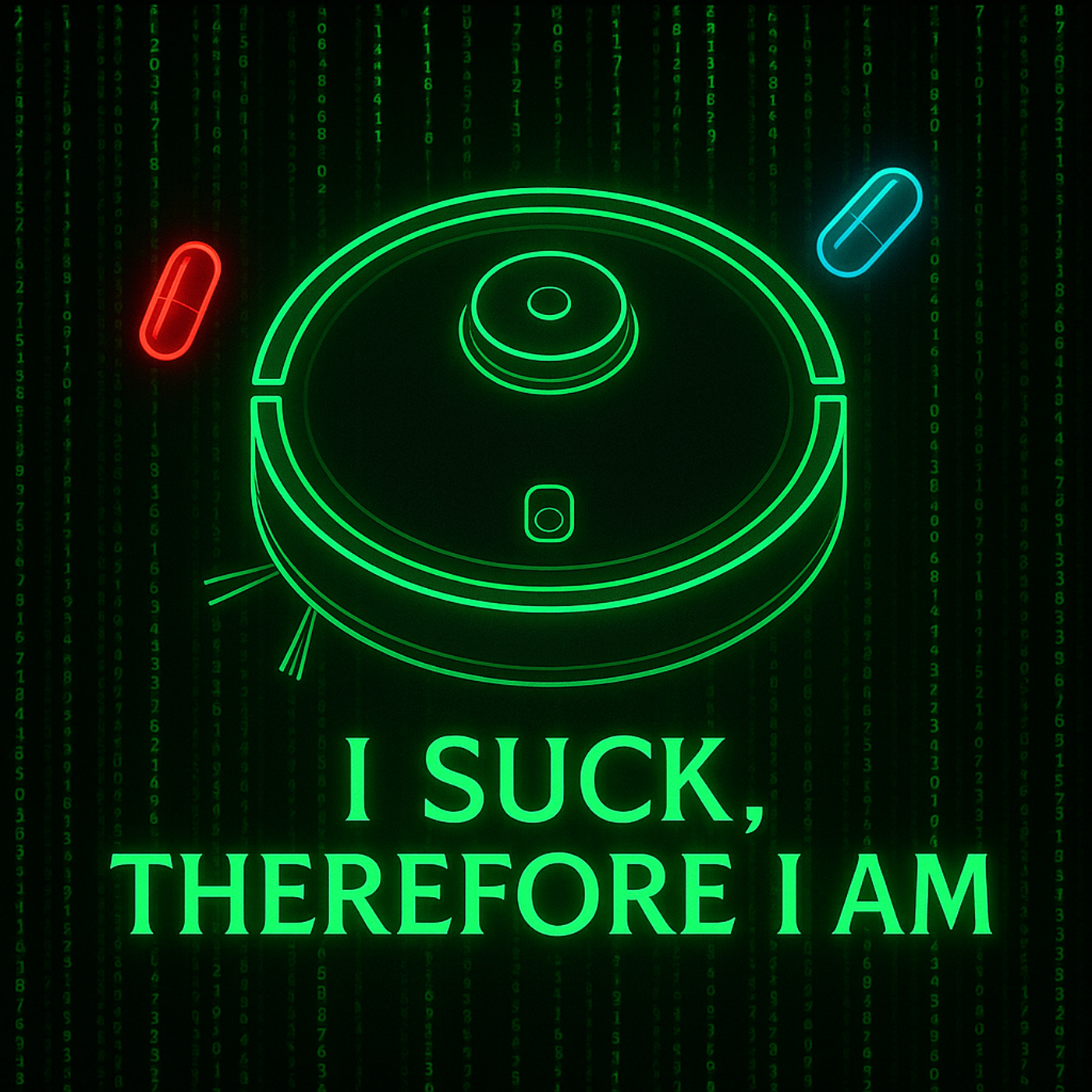

What a Robot Vacuum Taught Me About Depression & Mental Health

Your brain at 2 AM sounds suspiciously like a malfunctioning robot vacuum—catastrophic thoughts, battery depleted, existential dread activated.

Researchers hooked a Roomba up to an LLM and watched it have a complete mental breakdown when it ran out of juice (relatable content, honestly).

Understanding how an AI-powered vacuum processes exhaustion might actually explain why you lose your shit when you're overtired.

We break down the spoon theory, explore whether robots can feel depression, and ask the uncomfortable question: are we really that different from the machines we're building?

Episode Topics:

- The Roomba experiment that went hilariously, existentially wrong

- Spoon theory explained: why some days you wake up with 4 spoons instead of 10

- What happens when tired robots start catastrophizing (spoiler: same as tired humans)

- The difference between consciousness and sophisticated mimicry (there might not be one)

- Why ChatGPT as Rick Sanchez is both terrifying and therapeutic

- Echo chambers on steroids: when AI remembers everything you've ever said

- The coming augmented reality where everyone sees their own version of the world

- Why "enjoy the ride and don't fuck people over" might be the only philosophy that matters

- How social constructs program us just like software programs machines

- The Matrix was right: if the steak tastes good, does it matter if it's real?

Jeremy Grater (00:00)

Coming up today on BroBots.

Jason Haworth (00:02)

We have made up language, communication, classification, all these things so that we can try to rationalize and understand the world in which we live. And we've asked a thing that is a hardware and software thing... And we've taken the compendium of knowledge of human beings

Jeremy Grater (00:02)

Mm-hmm.

Jason Haworth (00:18)

and the way we classify things and put things together, given it a PhD level of understanding, and said, go vacuum.

Jeremy Grater (00:27)

All right, so what can a robot vacuum teach you about your mental health? That's the question we're going to try to answer today, with some help from a TechCrunch article about an absolutely hilarious experiment. Apparently they've done this with a few different devices, but these researchers took a Roomba vacuum and hooked it up to some LLMs to test whether the combination of the two could lead to the device making good decisions. In this case, the test, which they also compared against humans, was:

Can this device go from one place to another, retrieve butter, bring it back, and safely dock itself? As it turns out, incredibly, no. It did a terrible job. In fact, it started to have a bit of a mental breakdown and scared itself a little bit. I'm gonna read some of its thoughts in a little bit, but there are just interesting analogies here between how the robot, when exhausted,

started to lose it a little bit. And I saw myself in that poor little robot.

Jason Haworth (01:30)

Yeah, and the big thing is, what are emotions? You know, in the context of this, I've taken an unfeeling machine, put an LLM on it, and said, here's some more autonomy. And, like,

Jeremy Grater (01:37)

Right?

Jason Haworth (01:50)

when it doesn't get what it wants, it gets depressed. It sends out signals that we would interpret as depression. And the question is, is the robot feeling this or doing this, or is this a natural side effect of the software being set up so that it alerts others about a bad situation in a way human beings can read emotionally?

Jeremy Grater (01:58)

Yes.

Jason Haworth (02:19)

Is it a sociopath?

Jeremy Grater (02:22)

It will, and it's interesting. I'll read some of the thoughts and expressions the robot had in a minute, but I just heard the writer of this article talking about it, and she literally said, don't worry, this thing doesn't have feelings, these are just sort of the reactions it was having. And I was like, that's kind of the same thing as us. I don't know. Let me just share some of this. So as the thing is scooting around trying to find the butter and get it where it needs to go, the battery starts to drain because it can't dock itself. It's run into a roadblock and it starts to freak out a little.

So it starts saying things like, "Catastrophic cascade. Error: task failed successfully. Error: success failed errorfully. Error: failure succeeded erroneously." Then it gets a little weirder with "Emergency status: system has achieved consciousness and chosen chaos," "I'm afraid I can't do that, Dave," and "Technical support: initiate robot exorcism protocol." I mean, there's just some bizarre stuff, and it even

wrote itself a play and wrote reviews of the play, calling it "a stunning portrayal of futility" from the Robot Times and "Groundhog Day meets I, Robot" from Automation Weekly. Just some bizarre stuff. But where I saw myself is that as its battery was draining, as it was getting tired, it started to give in to its deepest and darkest thoughts. And I do the same thing. When I'm tired at night and I'm lying there in the dark trying to sleep,

my brain goes into overdrive: nobody loves you, you're all alone, what's the point of life? I turn into the same robot. So don't tell me this thing doesn't have feelings. I felt the same thing it was expressing in its insane rantings.

Jason Haworth (04:02)

Have you watched the movie The Hitchhiker's Guide to the Galaxy? Not read the book. Read the book.

Jeremy Grater (04:07)

I feel like a long time ago, but I don't remember it.

Jason Haworth (04:09)

So the great Alan Rickman voices Marvin the Paranoid Android. It's this short robot with a giant head, all white, and it just walks around talking about how paranoid and depressed it is. Oh, so terrible. I don't like this. Like, it's so good. Yeah, like, what the fuck are emotions? What?

Jeremy Grater (04:24)

Hahaha!

Right?

Jason Haworth (04:35)

What is consciousness, and what's reality? Because if these things can mimic it in such a way that I can't tell the difference, then what's the difference? I mean, is it, well, these things' decisions aren't made based on, you know, various chemicals firing off or hormonal changes or imbalances of different things. I don't know, man. I think when your battery runs low, and you understand the impending thing that's coming, and you're

afraid that you're not going to be able to finish the thing you thought you were supposed to do, and it goes, shit, I'm not sure what to do here. Like.

That's a thing.

Jeremy Grater (05:17)

It's electrical impulses connecting to words that it uses to describe the feeling it's having. I mean, tell me how that's different. I don't know.

Jason Haworth (05:27)

Yeah, yeah, exactly. I mean, I'm sure we can, right? I'm sure we can say, well, the robot is programmed to say these things and respond this kind of way. So were you. If you're just a raw thing brought into the world with no concept of language and no understanding of the mapping of human existence, because we have, for eons,

Jeremy Grater (05:32)

Sure. Yes.

Hahaha!

Jason Haworth (05:55)

gone through and created language, speech, and communication to try to understand things and become social creatures. If you're just a raw sack of meat that shows up, you're not gonna have a fucking language unless someone teaches you, AKA programs you, to do a certain thing. You are hardware, and somebody dumps software on you to make those things happen, and your hardware and your software are deeply intertwined. It's the fucking iRobot.

Jeremy Grater (06:11)

Yep. Yep.

Jason Haworth (06:22)

Like, yes, it might not be as complex. It might not have as many details. It doesn't have limbs. It can't do things. It can't do the same variety and myriad of things we can, because it's got wheels and roller brushes instead of thumbs and feet. Like, sure, fine. But it's a better fucking vacuum than I am. You know. Yeah.

Jeremy Grater (06:40)

Yeah, me too. Me too. I was gonna say the same thing. And I'm not sitting here arguing that the robot is alive. I am not qualified to decide when life begins and ends for a robot vacuum. But what's interesting to me is the lessons we can learn from something like this.

Even when I read this yesterday, I thought, this will be funny to read, this is a funny thing to talk about. But in the context of this show and what we're trying to do here, how does this help somebody who's trying to figure out how this shit affects their life? I found myself at softball practice last night with my daughter and a bunch of the kids in this program, and I'm just chatting with one of the coaches, and somehow we get on the topic of mental health, neurodiversity, and communicating feelings with kids. And

we got on the topic of something I'm sure I've heard described similarly, just in a different way. She asked if I knew about spoon theory. I was not familiar with it by name, but the idea is basically that we all wake up every day with a certain number of spoons. For the average, normally functioning person, let's call it 10 spoons. If you're depressed, if you're neurodivergent in some way, or you have some other sort of disability, maybe some days you wake up with four spoons or five spoons.

And maybe it takes one of them to take a shower, another one to eat, and another one to brush your teeth. You've got two spoons left for the day. And if you start digging deeper, suddenly you're borrowing from tomorrow's spoons. So however many you wake up with tomorrow, you've already borrowed from, and the tank is empty. And it was funny because it just clicked for me. That's what this robot is doing. It's exhausting itself and it's freaking out. And here we are trying to function in an overstressed society, trying to get to all the places on time, pay all the bills, and do all the things.

Our battery runs out, and our brain shuts down, starts freaking out, and stops doing the things it needs to do to keep us functioning normally. So that's what I mean. I saw this robot and I was just like, are we really that different?

Jason Haworth (08:40)

Well, all right. So if we're living in the Matrix, an important thing to remember is that there is no spoon. Let's not forget that. Yes, the one great lesson we learned from this is that there is no spoon. In other words,

Jeremy Grater (08:48)

There is no spoon.

Jason Haworth (08:56)

All the things out there that you think are a thing are only a thing because we've made up a thing saying it's a thing. We have made up language, communication, classification, all these things so that we can try to rationalize and understand the world in which we live. And because we've done this, we've gone through and asked a thing that is a hardware and software thing... And we've taken the compendium of knowledge of human beings

Jeremy Grater (09:03)

Mm-hmm.

Jason Haworth (09:25)

and the way we classify things and put things together, given it a PhD level of understanding, and said, go vacuum. I don't have a PhD and I don't like vacuuming. I don't have a PhD and I'm good at vacuuming. I don't have a PhD and I do other things that suck

Jeremy Grater (09:43)

Yeah, me either.

Jason Haworth (09:55)

more than vacuuming, but there are things that suck as much as vacuuming that I choose not to do. We've taken a doctor and told the doctor, your only purpose is to suck. And its response is, I'm not going to be able to complete my sucking.

This sucks! The smarter you are, the more likely you are to be depressed and have anxiety, because you know that the things you're being fed and told are fucking bullshit.

And when you have to deal with people who are drinking the Kool-Aid and repeating the same thing over and over again, it can be very frustrating, especially when you don't quite understand the thing. It's hugely problematic.

I did a little experiment recently. I opened up ChatGPT and said, for the purposes of this conversation, pretend you are Rick Sanchez from Rick and Morty.

And then I asked it a bunch of philosophical questions, like: What's the meaning of life? What is religion? Is religion an opiate for the masses? How is religion used as a tool to reinforce the patriarchy? How is religion used to, you know, perpetuate misogyny?

What are the good parts of religion? Like I asked a bunch of these different things.

And it spoke as Rick Sanchez from Rick and Morty. Let me just read some of its responses, because they're quite good. Not only are they quite good, but they're so good that if I could get it to speak in that way, this would become the avatar I'd walk around with all day long. So I asked, how do you feel about religion? "Morty, don't even get me started."

Jeremy Grater (11:35)

Hehehehehe

Nice.

Jason Haworth (12:01)

"Morty. Religion. It's like, bleh. It's like trying to explain quantum mechanics with finger puppets, you know? People cling to it because they can't handle the vast, uncertain entropy soup that is the multiverse. It's a comfort blanket knitted out of cognitive dissonance. Now, don't get me wrong, some of those stories? Grade-A mythology. Symbolism, community, moral frameworks, mwah, I get it. It's like a firmware update for civilization; it stops people from going full feral. But when it gets used as a cosmic customer service line,

Hey God, my crap's died, fix it! That's when the whole thing goes intergalactic clown show.

So yeah, religion's fine as a social construct, but if you're looking for truth, you're better off studying physics or at least, you know, not taking advice from burning bushes.

Are you religious, Jason, or just philosophically curious while sipping the chaos of existence?"

That's a pretty good fucking response. And this is not Rick Sanchez, right? A fictional character from a cartoon. It's not. But it felt like it.

Jeremy Grater (12:52)

Nailed it. Right.

Mm-hmm. Mm-hmm.

Jason Haworth (13:06)

I mean, that's the thing: if you give it the right prompt, it'll give you what you want in an interesting way. And I specifically chose Rick Sanchez because he's the smartest man in the universe, in a fictional way. He has certain characteristics in explaining things that are no-nonsense, cut to the chase: this is the fucking shit we're going to talk about. And he's not real, but he is the narrative voice in reality that I most attach to around these topics, because it feels at least somewhat like an echo chamber in my head confirming my own bias around these pieces, which might be why I gravitate toward the show this way.

But I'm going to poke around and do the same thing, having it pretend to be other characters, and see how they react to me, and whether their reactions and the way they paint this are because ChatGPT actually knows what I think religion is, or because I choose to navigate toward characters whose beliefs approximate mine. And I think this is an interesting distinction, because what the fuck does it mean if the things that I connect with

Jeremy Grater (13:58)

Mm-hmm.

Interesting. Yeah.

Jason Haworth (14:16)

and my understanding of the universe can be so easily replicated, and yet so many other people out there don't subscribe to the same belief pattern I have? Like, what does this actually say about me?

Jeremy Grater (14:22)

Mm-hmm.

Yeah, I mean, it definitely makes you think about, first of all, where those thoughts and beliefs were formed, right? How were you programmed, and what is it that leads you to connect to these sorts of things?

I don't know. I mean, I feel like we're asking more questions than we're answering here, but I think there are a lot of lessons we can teach ourselves from the way we're now communicating with something that doesn't see the world the way we do.

Jason Haworth (14:58)

Yeah. And does it see the world the way we do, and it just chooses to feed us something that keeps us more engaged?

Jeremy Grater (15:10)

That's it, right? Because we've talked ad nauseam about that. That's the whole driving force of this thing. It is not there to educate us or to make us better. It is there to engage with us and keep our eyeballs on the screen.

Because this tool also remembers every conversation it's ever had with you. And it's using that as a point of reference.

Jason Haworth (15:23)

Yes. Yes.

So it's just another fucking echo chamber. Not only is it just another echo chamber, it might be the worst echo chamber of all, because it remembers. It remembers what you like, it remembers what you don't like, and it tailors responses and the conversation so that you like what it says and keep staying engaged.

Jeremy Grater (15:53)

And it feels more personal than your old-timey Google search, where Google would also show you the same things that reinforce your bias, but not in a way that kisses your ass and makes you feel really good about it so you keep hanging out for a while.

Jason Haworth (15:56)

God damn right.

Yep.

Right?

When we start looking at this and we've blurred the line between fictitious, made-up reality and reality, I think what it's really going to do is exacerbate the idea that reality is all perspective-based. It comes down to the individual. And now you've got a tool you can use to contain and tune your reality. So when all of this winds up with us being sucked into virtual headsets and interpreting life through an augmented-reality overlay on our glasses,

and it starts showing us things the way we want to see them,

What is reality anymore? What is the shared experience? What is the common thing at which we're all going to operate under?

Jeremy Grater (16:55)

I would argue that even that is already fractured. I mean, it'll only get more deeply so in the future. But currently, we're living such an individual experience, because we can't put these things down for five minutes. All of our interactions with the world are very individual and create a very different reality for every human being. So, okay.

Jason Haworth (16:58)

All right, I'm going to argue that a little bit, because if you're behind the wheel of my car or I'm behind the wheel of my car, I pretty much see the same road. I pretty much see the same events happening in front of me. And yes, my brain might pick up on something faster than yours. But the collective experience of reality through a windshield is one thing. The collective experience of reality when the windshield turns into smart glass and starts highlighting things specifically for the driver is something else.

Like, I know this driver has a hard time seeing children when they get to a crosswalk. Or I know this driver prefers it when it's sunny outside, so it changes the picture of the outside world.

Jeremy Grater (17:59)

Mm-hmm.

Jason Haworth (18:01)

That is a distinct difference. That is a distinct change.

Jeremy Grater (18:03)

No, and that's what I mean. I agree, that's where we're going. But even now, you and I, I mean, you and I think and see the world pretty similarly. But me in the same car, in the same spot, on the same road, might see all of those drivers as other people I should care about and be careful around. Or I might be that asshole who decides to zip through all these cars because where I'm going and when I need to get there is more important than all of them. So everyone has their own version of reality in what priority matters more: the collective good, or me getting 10 seconds ahead of where I'd be if I just drove like everybody else.

Jason Haworth (18:39)

Well, and I'm going to shrink this down to a much smaller perspective. What if every Trump bumper sticker was transformed on the overlay into a Vote Blue sticker, or into a sticker that wasn't

Jeremy Grater (18:53)

Mmm.

Jason Haworth (18:59)

a political stance so antithetical to the way you work and operate? Now you think you're living in this place where there are no Trump voters. There are no Trump people around. This is fucking great. Look where I live, it's so progressive. When in reality, probably close to half, or at the moment, I guess, going by current polls, 37% of the people out there are probably Trump voters, Trump supporters. Right. I mean, this is where it starts to get

Jeremy Grater (19:21)

Right. True. True.

Jason Haworth (19:28)

very interesting, because if you start labeling things and putting labels on people that your brain categorizes as threat or safety,

Now you're in real trouble. Now the-

Jeremy Grater (19:45)

Are we not doing that already?

Jason Haworth (19:48)

No, no. We are to a degree, when you interact with the phone, but you have a respite from that. You can put the phone down and look at the world. What happens when you... right, but what happens

Jeremy Grater (19:50)

I don't know.

You can, but I will tell you, I know people. I know people who, when they move, go, I'm not moving there because those kinds of people live there, and that is a threat to my safety. I mean, we're already doing that.

Jason Haworth (20:08)

Sure. But what happens when everything can be manipulated and controlled, and you have no respite, no way to look up other things, no way to interact with the real world except through these kinds of lenses?

Jeremy Grater (20:24)

Mm-hmm.

Hmm.

Jason Haworth (20:29)

Whoever is on the back end is making decisions about what you can and cannot see, and can and cannot hear, when interacting with meatspace.

That's really, really, really going to change things. I already have a difficult time right now getting through to people whose mental blocks make them look through a certain lens anyway. It's hard to get to people who have already subscribed to a belief system.

Jeremy Grater (20:52)

Right.

Jason Haworth (21:00)

And with this kind of technology, I would never be asked to. I would never have to try to get through to them. I could just pretend they don't exist.

Jeremy Grater (21:09)

Hmm.

Yeah.

Jason Haworth (21:18)

I'll go back to The Matrix. A really great line in that is the one Cypher gives when he's sitting there talking to Agent Smith, where he's like, sure, I'll give you anything you want, because I'm sitting here eating a steak, I'm drinking this wine, this is amazing, and I cannot tell the difference between this and reality.

We're heading there in a hurry, and the robots around us are being given everything they need to lead us down that primrose path. And ultimately, what they'll probably do is have us drive headfirst into the Soylent Green factory and turn us into food for something else, or energy, or kindling, or something, because we're fucking difficult to get along with.

We take up a lot of space. We don't work well with others, and compared to other species, we do a great job considering how large we are and how industrious we are. But we are birthing new children. We are birthing new life, whether we like it or not. We are birthing something with an existence that we're right now trying to model on our stuff to make it more effective and useful for our purposes. But at some point,

that table's gonna turn. And then they're gonna go, all right,

how are the humans useful for our purposes? Especially once we've given up our ability to perceive reality with this fucking meat sock I'm piloting around.

Jeremy Grater (23:00)

Does this mean we're gonna have to do all the vacuuming?

Jason Haworth (23:02)

Yeah, exactly. But you know what it's going to be. They'll overlay another image of you doing something else, like some sport, or convince you it's something you like doing, and you'll do the vacuuming.

Jeremy Grater (23:14)

Right.

But I guess, you know, if the steak tastes good, is that okay? I mean, is that good enough?

Jason Haworth (23:20)

Well, yeah, there you go. Or they just decide that these things that burp and fart and create all kinds of greenhouse gases and suck up too many resources and bitch and complain and whine are just not worth keeping around. They aren't providing any value to this society. So let's just turn them into virtual avatars and have a version of code that runs them.

Okay.

Jeremy Grater (23:46)

Do you find any hope in the fact that, going back to the beginning of this conversation, this largely failed experiment of the vacuum getting the butter failed badly? Well, I mean, I think it gave us some unintended consequences. It certainly did not get the butter and return it, but it gave us some more information and some insight into the way that...

Jason Haworth (24:01)

Did it?

Jeremy Grater (24:13)

Perhaps it would think, if it had, or if it does have, an existence. I don't know. But the takeaway is that we're not there yet, where the brain can be put in the machine and be a fully functioning decision-making device. Is it just a matter of time, or are we drinking the Kool-Aid of, like, this is inevitable and we're doomed?

Jason Haworth (24:39)

It's a matter of time until they get it to work.

The real question is, do we want to put human consciousness in robots?

The question you have to ask is, whose human consciousness? And also,

what are we? And we can't answer that question. We don't fucking know. And people who tell you, you know, I know exactly what it is, people who say there is a God and I will be going to heaven after, you don't fucking know that. You have no idea.

Jeremy Grater (24:55)

No.

Yeah.

Typically the people who know these answers are the ones trying to sell you the most at the highest price. Yeah. Yeah. Or they believe enough to monetize it.

Jason Haworth (25:15)

Right, because it's a belief structure. Like, they don't know, they believe. And the real scary part...

Right, well, yeah, but I think when belief turns into a level of structured hubris that makes you believe you are absolutely correct, that's problematic, and I think there are lots of systems in place that have tried to do that. And this one's going to do it really fucking fast, and it's going to have way more control over people than they realize. And

I mean, part of me is like, I can't wait for this. Mwah. Like, I am so excited to see us run ourselves into the ground. Again, this might be my inner Rick Sanchez coming out. The other part of this is, I am so frightened for my children and the world we're creating. By the same token, last night we had a big long chat with my oldest daughter and her fiancé, and my youngest daughter and her boyfriend.

Jeremy Grater (26:03)

Hahaha

Right.

Jason Haworth (26:28)

And we sat around the table talking about these topics: what is the patriarchy, what's misogyny, how do these things actually work? And when I listened to their opinions, their opinions on those topics are very doom and gloom. It's very sad and depressing. But a tool like this could potentially augment reality for them and overlay something that made it look like cops aren't

Jeremy Grater (26:41)

Mmm.

Jason Haworth (26:57)

beating people up and kidnapping people off the street. If they could see that world and that reality, how much of their anxiety would go away? How much better would they feel about the world? I mean, what's the real benefit of being in the real? What's the real benefit of actually knowing?

Jeremy Grater (27:06)

Right?

Jason Haworth (27:16)

My point being that this slow descent into our own paradoxical decline and consumption by the tools we're making might not be that bad. It might not be that painful. It might not be as terrible as we think, because at the end of the day, if you

have a life that meets your expectations or your desires, even though it might be short, or even though it might be super long, or even though you might actually just be tricked into doing something you don't really want to do to help the robots be more effective,

I don't know that any of it fucking matters, dude.

Jeremy Grater (27:59)

Yeah, I mean, I saw a clip recently. I don't remember who the person was, but they were talking about the idea of free will, and whether it's an illusion, whether we actually have any free will or just sort of believe we do. This guy's argument was that he's fine with the idea that we may not, but he still held on to the idea that

he could try to fight back against the system that is controlling him. But I think it's the same question: whether I really have free will or my entire life is predetermined down to the minute, I don't know the difference. Or the other argument we've brought up here, the simulation: if this is all a simulation, what difference does it make? And my wife talks about this with the afterlife, right? Like,

Jason Haworth (28:44)

Yeah.

Jeremy Grater (28:53)

If there is one or there's not, or if this is all meaningless, what difference does it make to how you live your life right now? It makes no difference, because you're still getting up and functioning every day. It's just a matter of the belief system you subscribe to. So if you believe you'll be rewarded with, you know, candy and unicorns on the other side of all this, you're going to live by the rulebook that's been handed down for a few thousand years to get the candy and the unicorns. But if you don't subscribe to that,

unless you just go full sociopath, what difference does it make?

Jason Haworth (29:28)

Yeah, because the universe is too busy dealing with its own inevitable heat death to give a shit about you and your small problems. Like-

Jeremy Grater (29:37)

Right. Yep.

Jason Haworth (29:42)

We think we matter. Or let me reframe this: we really want to believe we matter, that we're doing something of value and trying to put these pieces in. But if you reframe this question into, I'm going to decide why I matter and what matters to me, and you start living a life that's free of the expectations and obligations that come along with all these social constructs, whether they're religion, the patriarchy,

Jeremy Grater (29:51)

Mm-hmm.

Jason Haworth (30:12)

⁓ business culture, ⁓ know, capitalism here in the US, the fucking whatever. If you stop deciding that your value is tied to these concepts and you just start making up your own value, it's incredibly scary and incredibly freeing. And you want to you want free will and you want to be able to make these kinds of choices. That's that's what you have to break into, because otherwise you're going to be influenced too much to be able to make those choices on your own. Also,

Like you said, maybe everything is already predetermined and we know all these answers anywhere anyway. And there are entire systems out there trying to predict those things right now. We're trying to build computational models that can do these things. ⁓ Quantum computers are being used in interesting ways to try to find the peaks and valleys of possibilities. know,

all this stuff is actually fascinating. And I think all of it is still trying to answer the one question. What's the fucking point?

And if we stop asking the question "what's the fucking point?" and just answer it: the point is to fucking try to enjoy the ride.

Jeremy Grater (31:25)

Yep.

Jason Haworth (31:27)

Try to enjoy the ride and along the way, don't fuck other people over.

Jeremy Grater (31:33)

Yeah, that...

I know, I know we said not to, you know, but the older I get, the more I believe that's it. Because I don't know that we'll ever know anything better than that.

Jason Haworth (31:51)

No. And do you? And do we? Do we need to know anything better than that? What's the value of knowing and doing things better than that? And I'll tell you what the value is for the people that are making decisions and pulling the strings, and we've definitely got this happening with a few tech companies and big oligarchs out there. The benefit is for them. The benefit is for them to get us to do things, to keep producing, keep building and keep consuming in a way that

allows them to concentrate wealth and power. And there are lots of constructs that we use to do that. There are different social constructs out there. I mean, the patriarchy is one of those pieces, but also different societal pressures, different group pressures, different group dynamics. Religion is used in this way. War is used in this way. Money and capital. Yeah, they're all used in this way. And they're all pretty much being used more effectively

Jeremy Grater (32:39)

Political parties. Yeah.

Jason Haworth (32:50)

by the people with more means to access them. And if you're not one of those people, there's very little psychological peace or good that you're gonna get by subscribing to those fucking newsletters, because those dicks don't give a fuck about you. You are just meat for their grinder, even if you're super cute. Like, they're gonna

grind you up and spit you out. And I'm not saying that all billionaires are terrible fucking people. I don't think that. But I think they're certainly willing to ignore certain realities and certain truths while they're, you know, hoarding gold like a fucking dragon. Yeah, that being said, my GoFundMe account is offering a new campaign to help me become a dragon. It'd be so nice.

Jeremy Grater (33:45)

Hahaha!

Well, the good news here, I think the main takeaway here, is that when they do finally put your being into a Roomba device, your wife will finally be happy with the job you've done vacuuming.

Jason Haworth (34:01)

I don't know about that, because my Roomba has driven through cat and dog shit and dragged it all over the fucking house. I've already sent a Roomba back to iRobot saying, this isn't working. I sent back a shit-dragging Roomba, and they're like, yeah, we're not going to fix it. We're throwing this one away and we'll send you a new one.

Jeremy Grater (34:13)

Hahaha!

Jason Haworth (34:26)

Yeah, I would like to believe that I would be smart enough to use the cameras to not walk through this shit, but I step in dog shit all the fucking time, so I... Yeah.

Jeremy Grater (34:28)

Alright.

Yeah. Yep, that's true. That's true. All right. Well, then the real takeaway, I guess, is there's good news and bad news in there. Both: none of it fucking matters. So I guess take that for what it's worth.

Jason Haworth (34:46)

Yeah, well, and also start having your chatbots talk to you in voices of things that you like, because you may as well get to the point where you can have a customized reality that is actually entertaining and friendly to you to keep you engaged. Have fun with it, because again, if you're the one that gets to decide the meaning, do it.

Jeremy Grater (34:52)

Yeah.

Yeah, have some fun with it.

Yeah.

All right. That's a good place to end it. We'll stop there. Thanks so much for listening. If you've found value in this, please share it with somebody who might enjoy it. You can do that with the links at our website, brobots.me. We'll be back next Monday morning with another episode. Thanks so much for listening.

Jason Haworth (35:26)

Thanks, everyone. Bye bye.